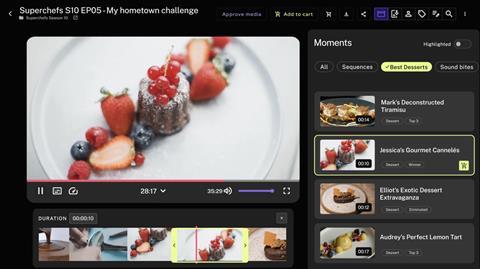

The AI generates humanlike descriptions of video content, breaking them down into meaningful scenes

Tedial has integrated Moments Lab’s MXT-2 AI indexing technology, which generates humanlike descriptions of video content, into its EVO media asset management (MAM) platform.

MXT-2 (pictured above) breaks videos down into meaningful scenes, recognising who’s in them, what’s happening, where it’s taking place, and what kind of shots are used.

It can also identify the best soundbites from interviews, speeches, or press conferences.

Philippe Petitpont, CEO and co-founder of Moments Lab, said: “Partnering with Tedial enables us to connect with more customers who are looking for next-generation MAM with the latest multimodal AI capabilities. Together, we are setting a new standard for media asset management, ensuring our clients have the tools they need to succeed in a rapidly evolving industry.”

“We are excited to partner with Moments Lab and integrate their advanced AI solutions into our EVO MAM platform,” added Julián Fernández-Campon, CTO of Tedial. “This collaboration enhances our ability to provide innovative, AI-powered media management solutions that meet the evolving needs of our customers.”
