Before we captured the great man as a hologram, we first needed to test the technology on his trademark shirt, says John Cassy

Production company Factory 42 in association with DRI, Talesmith, The Mill and Alter Equals

Commissioner Neil Graham

Length 45-60 minutes

TX Available via the Sky VR app from mid-May

Executive producer John Cassy

Director Dan Smith

Head of production Ruth Sessions

Photogrammetry Nicolas Galan; Fabian Micalef

Art director Laura Dodds

Lead game designer John Foster

Technical lead Richard Bates

Interactive supervisor Dave Ranyard

Sir David Attenborough has been to some of the most exotic places on the planet, usually dressed in a signature pale-blue linen shirt.

Almost a year ago, three of these trademark shirts were on an unusual mission of their own, carefully stowed in some hand luggage on a flight to Seattle. They were to be part of a very special technical filming test in a windowless high-security green screen room at Microsoft’s global headquarters.

The reason? To establish whether the technology was ready for the great man himself to fly to Seattle a few weeks later and be captured as a photo-real, moving, three-dimensional hologram.

The process involves an array of 106 cameras pointing in on the subject and is so cutting edge that even the tones and potential creases on his shirt had to be tested in case they caused visual glitches and shattered the illusion of realism for viewers.

To rule this out, our director Dan Smith was tasked with donning one of the shirts we had carefully couriered to the West Coast and delivering a piece to camera for all 106 cameras. Thankfully, the shirts – and Dan – passed the test and our ambitious project was under way.

Groundbreaking VR

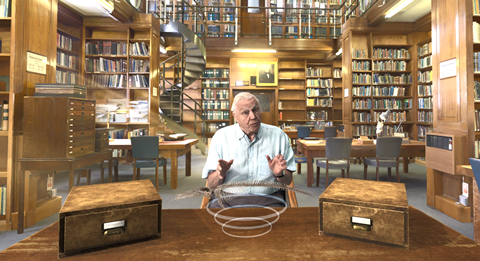

The holographic Sir David was to be the centrepiece of Hold The World, Factory 42’s groundbreaking virtual-reality experience for Sky VR.

Once successfully captured, he would be dropped into a virtual representation of real spaces at London’s Natural History Museum so viewers could have a one-on-one audience with him and some of the museum’s most fascinating objects.

It also marked another first in Sir David’s 60-year-plus career of innovation in broadcasting, which has taken him from black and white, through the introduction of colour, to every technical advance since, including HD, 3D, 4K and VR.

A few weeks later, Sir David was in the Seattle studio, his shirt collar safely glued down and his hair subjected to an intoxicating amount of hairspray.

Instead of the rare creatures that viewers would eventually see, there were paper cups turned upside down, positioned to give him an idea of where the viewer would be and where computer-generated objects would later appear.

Quietly motoring away behind the green screen were servers processing raw video being captured at an astonishing rate of 10GB of data per second.
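To put the 10GB-per-second figure in perspective, here is a back-of-the-envelope calculation in Python. It assumes decimal units (1GB = 1,000MB) and that the load is spread evenly across the 106 cameras; neither detail is stated in the article, and the function names are purely illustrative.

```python
TOTAL_RATE_GB_PER_S = 10  # capture rate quoted in the article
CAMERAS = 106             # size of the camera array


def per_camera_rate_mb(total_gb_per_s: float = TOTAL_RATE_GB_PER_S,
                       cameras: int = CAMERAS) -> float:
    """Average MB per second from each camera.

    Assumption: decimal units (1 GB = 1000 MB) and an even split across cameras.
    """
    return total_gb_per_s * 1000 / cameras


def take_size_gb(seconds: float, total_gb_per_s: float = TOTAL_RATE_GB_PER_S) -> float:
    """Raw data generated by a take of the given length, in GB."""
    return total_gb_per_s * seconds


print(f"{per_camera_rate_mb():.1f} MB/s per camera")
print(f"{take_size_gb(60):.0f} GB for a one-minute take")
```

On those assumptions each camera contributes roughly 94MB a second, and a single one-minute take amounts to about 600GB of raw footage before any clean-up.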

Once cleaned up, the selected takes were transferred back to London and dropped into Unity, the computer game engine, to create a vividly real representation of Sir David inside the Natural History Museum.

The rooms where he ‘sat’ were captured using photogrammetry, a process that involves hundreds of pictures being taken of the real rooms at the museum and then reassembled into accurate 3D models using special software.

Driving that process was Alter Equals, an amazing start-up based on an anonymous industrial estate in Battersea, south London.

Knitting it together and creating the code that drove the interactivity was our game developer partner Dream Reality Interactive. Designers, artists and coders spent months creating an intuitive interactive experience.

An extraordinary process of learning and collaboration tested the resourcefulness and patience of all involved.

Creating a piece of content that sat somewhere between a TV documentary and a computer game forced everyone to question and adapt trusted ways of working: both the TV producers used to a linear production model and the coders steeped in an ‘agile’ development approach.

The final product gives users the chance to handle extraordinary creatures that then come to life, including a stegosaurus, a flea of the type that spread bubonic plague, a dragonfly and, of course, the incredible blue whale skeleton that hangs from the ceiling of the museum’s main hall.

John Cassy - My tricks of the trade

- Realise that ‘world firsts’ are what they are: no one has done them before and things will go wrong, so build contingency into your budget and schedule.

- Remember, it is only telly (or VR).

- Remember that everyone generally wants to make the best project possible – even if they are driving you mad.

- With software, think of a budget, then double it.

- When shooting with cutting-edge tech, plan, plan and plan again. It’s expensive stuff and you can’t afford to get it wrong.

Our creature models were created in partnership with the CGI gurus at The Mill using data and textures that the museum’s in-house scanning department captured from real objects.

Everything had to be scientifically accurate, and the technical and creative challenges were endless. They included juggling the limited ‘budget’ of polygons (effectively, resolution) available in any scene, concentrating detail on the areas where viewers are most likely to focus, such as Sir David’s face, without overloading the VR headset.

The same applied to the CG used for the creatures. Things that are simple in TV, such as frame rates, edits and even syncing music, proved problematic.
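Juggling a polygon budget can be pictured as a simple allocation problem. The Python below is a toy sketch, not the method used on Hold The World: it assumes each object has a full-detail triangle count and a hypothetical ‘attention weight’, and shares a fixed per-frame budget in proportion to that weight, so the presenter’s face gets the largest slice. The class, function, object names, weights and triangle counts are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    attention_weight: int  # hypothetical: relative likelihood that viewers look here
    max_triangles: int     # hypothetical: triangle count of the full-detail model


def allocate_triangles(objects: list[SceneObject], budget: int) -> dict[str, int]:
    """Share a fixed triangle budget in proportion to attention weight.

    Each object is capped at its full-detail triangle count; any surplus is
    redistributed among the objects that can still use more detail.
    """
    allocation = {o.name: 0 for o in objects}
    remaining = budget
    active = [o for o in objects if o.max_triangles > 0]
    while remaining > 0 and active:
        total_weight = sum(o.attention_weight for o in active)
        if total_weight == 0:
            break  # nothing left that viewers are expected to look at
        handed_out = 0
        still_hungry = []
        for o in active:
            share = remaining * o.attention_weight // total_weight
            grant = min(share, o.max_triangles - allocation[o.name])
            allocation[o.name] += grant
            handed_out += grant
            if allocation[o.name] < o.max_triangles:
                still_hungry.append(o)
        if handed_out == 0:
            break  # leftover is too small to split by integer division
        remaining -= handed_out
        active = still_hungry
    return allocation


scene = [
    SceneObject("presenter_face", attention_weight=6, max_triangles=50_000),
    SceneObject("presenter_hands", attention_weight=2, max_triangles=30_000),
    SceneObject("whale_skeleton", attention_weight=2, max_triangles=200_000),
]
print(allocate_triangles(scene, budget=100_000))
# {'presenter_face': 50000, 'presenter_hands': 25000, 'whale_skeleton': 25000}
```

Here the face hits its full-detail cap on the first pass and the unused share is split between the other objects. Real engines more often swap between pre-built levels of detail by distance or screen size (Unity’s LOD Group component, for example) than run a single proportional split; the sketch simply makes the trade-off visible.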

It then all had to be redesigned and optimised to run on mobile phone-driven VR headsets, not just on the powerful PC-driven headsets it was originally built for, such as HTC’s Vive and Facebook’s Oculus Rift.

But finally, after 12 months in development and another year in production, we reached another Attenborough landmark. And barely a hair looks out of place.

GETTING INTO THE MINDSET FOR INTERACTIVE VR

Dan Smith - director

It’s easy to become conditioned to the long-accepted methods of TV creation: filming, editing, grading and so on. For Hold The World, some self-analysis and a big change of mindset were required. We were placing TV content into interactive VR, which is a whole different ball game.

With TV, you film it, edit it, render it and then an obedient viewer watches it play over a fixed period of time on a rectangular device on the other side of the room.

Hold The World is not only a 360-degree world but is delivered in a ‘game engine’, so the computer renders it on a millisecond-by-millisecond basis as the disobedient ‘user’ or ‘player’ (not viewer) decides what they want to do, and when they want to do it. That could mean they pick up a fossil, enlarge it, stare at the floor, stand on their head, whatever.

This interactivity and ‘real-time rendering’, which is totally normal in video games, comes as a bit of a shock for TV makers (well, it did for us). The key was making decisions from the ground up at an early stage to allow for the technological restrictions of the new medium.

Writing the script, we had to think of everything that a user might do and make sure it worked in whichever order they might choose to do it.

TECHNICAL LIMITS

We knew that today’s CG-savvy audiences wouldn’t tolerate the relatively low-detail CG required by the real-time game engine. It seemed that hair, feathers or anything with a high level of detail in the geometry would be overambitious, so we only scanned and modelled objects that were fossils, made of bone, or had smooth surfaces, like insects.

The most difficult part of any specimen was the slightly fluffy texture on a butterfly’s belly. We also noticed that when users moved to the left or right of the hologram of Sir David, which always looks straight ahead, the lack of eye contact could be off-putting. Once again, a simple decision at an early stage, to make Hold The World a seated experience, solved the problem.
