Dinosaurs In The Wild is a hugely ambitious project that has kept Milk VFX busy for 18 months. It has created a huge range of 6K VFX shots, incorporating the latest palaeontological knowledge, for an immersive theatrical theme park-style experience that takes visitors back 67 million years to the time of the dinosaurs.

A team of 60 artists at Milk VFX worked on Dinosaurs In The Wild at any one time. The ‘experience’ started at the NEC in Birmingham earlier this year before moving to Manchester EventCity, followed by a move to the Greenwich Peninsula in London next year.

The scale of the VFX work on the production is astonishing, as Milk’s team explains to Broadcast TECH.

JC DEGUARA, VFX SUPERVISOR

It’s the biggest project I’ve ever worked on. We generated all the scenes you see out of the windscreen of the car that drives you through to the base station, as well as everything you see from the windows of the base station once you arrive. All the time, you’re surrounded by dinosaurs.

We went on a recce that took us from Seattle to Oregon in search of a suitable area for the drive, and for the backgrounds for the views from the base station. We ruled out anywhere with sand dunes due to the challenges of tracking such environments.

Eventually, we found an area that had been an Indian settlement, which looked like it would give us a five-minute route from our starting point to the base station, across an ideal-looking environment, including a river. As soon as we saw it, we knew it would work.

We initially went out and did a 3D scan of the whole terrain, which covered two square miles. We then put pre-viz models of the dinosaurs into this 3D environment, to map out how the story could unfold as the vehicle makes its way to the base station.

When you go into the base station, you look out at all the dinosaurs through four large ‘windows’. These are actually huge screens showing four eight-minute films. The story arc works from window to window and it’s timed so that major things happen in different windows at different times.

The director, Walking With Dinosaurs producer Tim Haines, worked up the story line using the pre-viz, while we sat at Milk and talked through it all.

When we went out to do the real shoot of the background plates, the extensive pre-viz meant we only had to make a few minor tweaks before we got what we needed. We shot for three days in 6K stereo on Red cameras. We shot plates above too, compositing them to make a 6,000 x 7,000 pixel image to match the proportions of the windows.

MILK - WHAT IT DID

● Created 4 x 8-minute CG dinosaur sequences for four ‘fake’ observatory viewing windows in stereoscopic 3D.

● Created a 1 x 4-minute ‘windscreen view’ for a simulated drive sequence, also in stereoscopic 3D.

● Processed 77,760,000 frames of effects.

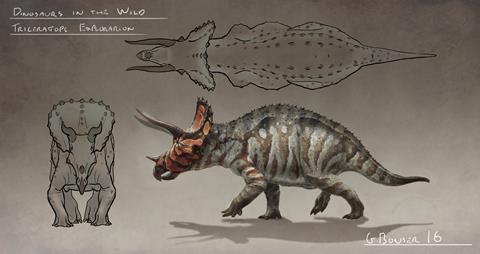

● Worked closely with UK palaeontologist Darren Naish and event creator Tim Haines to create and develop eight species of dinosaur.

● The animation was extensively pre-vized to ensure 360-degree continuity of dinosaur action across each of the four viewing windows.

● Tracked a four-minute safari ‘drive’ sequence across the plains to the dinosaur observatory research centre.

● Upgraded its pipeline to accommodate the sheer scale of the project, from fur and feather workflows to publishing systems.

● Software used was Maya, RenderMan, Yeti, Nuke, Ocula, Houdini, 3DEqualizer and Golaem Crowd.

● Approx 60 artists worked on the project.

The car is on a gimbal so you feel the bumps and sharp movements when a dinosaur runs across the roof. We put cracks, dirt and dust on the screen to enhance the authenticity as you look out of the window. We portrayed the dinosaurs as accurately as possible, based on palaeontologists’ latest thinking about how they looked.

We worked closely with Haines and palaeontologist Darren Naish on all the details. The Velociraptors have full plumage, the Tyrannosaurus rex has more feathers and fur on it than you might expect and its muscle system is much more stocky, like an alligator.

We set up a series of folders containing references for each dinosaur. Haines worked with our concept artist to update and enhance the details of the references, including the bone structure and the muscle system, and we worked to incorporate these into each model. He then came in and looked at every part of each dinosaur. If there was anything he wasn’t completely happy with, we’d go back and re-do it.

Animation becomes more complex the longer it’s on, so when it’s continuous for eight minutes, it becomes very difficult. We added a few ‘cheats’ to make the animation a little easier, including a dead dinosaur getting pushed out of the scene so we no longer had to include it in the ones that followed.

As well as incorporating all the dinosaurs, we added mountains and had to replace a lot of the grass with CG elements to make the stereoscopic work a little easier.

To add to the realism of the experience, a man inside the base station building with you steps out to help rescue some people from a vehicle. He then appears on the screen as if he’s outside with the dinosaurs.

Each window has dinosaurs really close up, so there are key scenes for which a lot of intricate, detailed work was required. It ends when the T. rex gets right up close to the glass and starts to bash away at it until it appears to crack it. At this point, the alarms go off and everyone is ushered out of the base station.

It’s all bespoke animation and no walk cycles are used. It’s a proper story, moving from window to window, which made it much more complicated. There were some very late nights and a lot of hard work. But the result feels natural and I’m very proud of it. It’s a huge achievement.

CHRIS HUTCHISON, ANIMATION SUPERVISOR

We had a scientist with us who had a good idea about how each dinosaur moved and we looked at a lot of reference material.

We had a rough storyboard of what would go on in each window – there was a hero character in each one and we knew their key moments and the beats we needed to hit, based on what was in the pre-viz. It was like choreographing a ballet.

We couldn’t recycle walk planes in each window as they had different slopes. So we had to adapt all the walks to each different territory – there was no cutting corners. The scale of it was insane.

It is very satisfying when, after all the hard work, you see renders and they are really, really good. This pushes you on. Every department pushed every other department. No one wanted to let anyone else down.

I really enjoyed the experience when I saw it at the NEC in Birmingham. Hearing it with the audio really blows you away. And seeing how people reacted, with the actors, the sets and the props, you get an appreciation for it all over again.

DARREN BYFORD, CG SUPERVISOR

The rendering was a monumental task. We set up a production line so when shots started coming through we could process them as quickly as possible. That was the plan, at least. It was pretty streamlined; everything that could be automated was automated.

A lot of time was spent finessing details like dust, debris, sand, water, blood, dents in the sand, bits of creatures, the trees and the CG blades of grass, as these really add to the integrity of the images. We used cloud-rendering, which no one had done on this scale before. There were hundreds of thousands of jobs in a single render submission, so even a 0.1% error rate equalled a lot of frames.

We were rendering so much that errors could really stack up, so we wrote a tool to auto-check all the frames. It would create a list of all the broken frames and let us push a button to re-submit them to the renderer.
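As a rough illustration of the kind of check Byford describes, here is a minimal Python sketch. It is not Milk's tool: the file naming pattern, the "missing or empty file" test for a broken frame, and the `submit_fn` callback standing in for the render farm are all assumptions made for the example. The helper `expected_failures` simply shows the arithmetic behind the 0.1% remark, using a hypothetical job count of 300,000.

```python
import os


def expected_failures(job_count, error_rate):
    """Rough number of failed jobs for a given error rate (illustrative only)."""
    return round(job_count * error_rate)


def find_broken_frames(render_dir, first, last,
                       name_pattern="frame.{:04d}.exr", min_bytes=1):
    """Return frame numbers whose file is missing or smaller than min_bytes.

    The naming pattern and the size-based test are assumptions; a real
    checker would likely also validate the image contents.
    """
    broken = []
    for frame in range(first, last + 1):
        path = os.path.join(render_dir, name_pattern.format(frame))
        if not os.path.isfile(path) or os.path.getsize(path) < min_bytes:
            broken.append(frame)
    return broken


def resubmit(frames, submit_fn):
    """Pass each broken frame to a caller-supplied submit function.

    submit_fn is a hypothetical stand-in for whatever the render
    queue exposes; it receives one frame number per call.
    """
    for frame in frames:
        submit_fn(frame)
    return len(frames)
```

With these assumptions, 300,000 jobs at a 0.1% error rate would leave about 300 frames to re-render, which is why a one-button re-submit matters at this scale.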

We also made a ‘creature loader’ that grouped creatures into herds. If the different herds were too close, we had to render them separately, but we could render seven of the same creatures at the same time. It’s about making even the weakest link as good as it can be, so even the background creatures look great.
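The grouping idea can be sketched in a few lines of Python. This is only a guess at the shape of such a loader: the `(name, species)` input format is invented for the example, and the limit of seven per batch is one reading of Byford's remark about rendering seven of the same creature together. The rule for separating herds that stand too close is not modelled here.

```python
from collections import defaultdict


def group_into_render_batches(creatures, max_per_batch=7):
    """Group creatures by species, then split each group into batches.

    creatures: iterable of (name, species) pairs (hypothetical format).
    Returns a list of (species, [names]) tuples, each holding at most
    max_per_batch creatures of the same species.
    """
    by_species = defaultdict(list)
    for name, species in creatures:
        by_species[species].append(name)

    batches = []
    for species in sorted(by_species):
        names = by_species[species]
        # Slice each herd into chunks no larger than the batch limit.
        for start in range(0, len(names), max_per_batch):
            batches.append((species, names[start:start + max_per_batch]))
    return batches
```

Under these assumptions, a herd of nine animals of one species would be rendered as one batch of seven and one batch of two.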

DAVE GOODBOURN, HEAD OF SYSTEMS

Our cloud-rendering requirements meant we used a big proportion of Google’s machines in Belgium. We have a dedicated direct connection to Google through Sohonet, and it worked pretty much the same as if the machines were on our network. The render performance was as if the machines were here.

The cloud solution enabled the project to happen. Using the cloud almost makes it a level playing field in terms of computing resources. Then it’s down to the talent.

Broadcast TECH Sept/Oct

Milk VFX unveils the huge range of VFX shots it created for Dinosaurs In The Wild
