The third entry in the series examines how departments can work together across the post-production workflow

In addition to several users co-operating within one software system, new technologies enable closer collaboration between departments. This is needed not only to meet growing demands on image quality, but also because the rich metadata generated during production is valuable in the final product. Digital media libraries can use this metadata to run their search and recommendation algorithms, for example machine-learning-based keyword tagging, on a broader data set. Post-production workflows must therefore be adapted to ensure that metadata is passed through to the final deliverables.

SWR’s image post-production in Germany tackled these challenges in 2021, working alongside Qvest and FilmLight. In addition to the colour pipeline and IT systems – which we examined in parts one and two of this three-part series – the company’s workflows and general collaboration were also thoroughly examined.

In this context, co-operation between geographically distributed stakeholders is also becoming increasingly important. Streaming solutions have been used for some time in sound and editing to integrate external collaborators into the creative process via remote review, but their image quality has not been sufficient for colour-critical assessment.

With ever-increasing internet bandwidths, securely encrypted communication and ever-improving end devices, remote viewing is also becoming possible in image-critical post-production. But here, too, it is not just about sending the colourist’s ‘live’ image to an external viewer.

Set and post-production

The move from linear to on-demand distribution has seen a shift in power from programme directors to consumers, who now decide what is broadcast at prime time. In addition, the range of media content is growing steadily and the look of the content plays a significant albeit subconscious role in the consumers’ selection. The look not only differentiates a programme, but also conveys value and can classify programmes by theme and tonality.

The collaboration between cinematographers and colourists is crucial to creating a distinctive and consistent look. Look development can begin before shooting, and a stringent yet simple and robust on-set visualisation can help all departments with their creative decisions. This early collaboration also dramatically increases efficiency in post-production, because set and post-production interpret the image data in the same way.

There are several ways to build the look pipeline between set and post-production. In addition to traditional workflows such as CDL + LUT, there are mature formats such as AMF (ACES Metadata File) and FilmLight’s BLG. Each of these methods has its advantages and disadvantages. As every shoot has its own requirements, it is vital that post-production can support a variety of standards.
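The CDL half of a CDL + LUT pipeline is compact enough to show in full. The sketch below follows the published ASC CDL formulas: slope, offset and power per channel, then a single saturation value computed against Rec. 709 luma weights. It uses the clamping variant of the CDL; some implementations skip the clamps to preserve scene-referred values above 1.0, so treat that choice as an assumption. The LUT stage is not shown.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Rec. 709 luma weights used by the ASC CDL saturation operation.
LUMA_709 = (0.2126, 0.7152, 0.0722)


def _clamp01(x: float) -> float:
    """Clamp to [0, 1], as in the clamping variant of the ASC CDL."""
    return min(max(x, 0.0), 1.0)


def apply_cdl(rgb: Sequence[float], slope: Sequence[float],
              offset: Sequence[float], power: Sequence[float],
              sat: float) -> RGB:
    """Apply slope/offset/power, then saturation, to one RGB triple."""
    # SOP per channel: out = clamp(in * slope + offset) ** power
    sop = [_clamp01(c * s + o) ** p
           for c, s, o, p in zip(rgb, slope, offset, power)]
    # Saturation: scale each channel's distance from the Rec. 709 luma
    luma = sum(w * c for w, c in zip(LUMA_709, sop))
    r, g, b = (_clamp01(luma + sat * (c - luma)) for c in sop)
    return (r, g, b)
```

With `slope=(1, 1, 1)`, `offset=(0, 0, 0)`, `power=(1, 1, 1)` and `sat=1.0` the CDL is an identity, which makes it easy to check that a set-to-post round trip has not altered the values.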

All these workflows have one thing in common: they are based on a scene-referred colour workflow. The look is therefore agnostic to camera and playback format. In abstract terms, the look development defines the ‘colour process’ of the project.

Everyone involved in a production must see this collaboration as added value rather than additional work. If implemented correctly, the interaction between set and post-production increases efficiency for both areas. Furthermore, only consistency between look development, visualisation on set and execution in post-production allows fresh and bold looks to be created robustly. This is because it ensures that all decision-makers review the images with a look that is independent of the monitor or transcode. Even if this look is not yet final, it points in the desired direction and eliminates unproductive discussions about why the images look different from the camera manufacturer’s standard processing.

Editing and post-production

With regards to the post-production workflow, the question always arises whether an offline or a purely online workflow should be chosen.

Offline means that the creative editing takes place in reduced quality – ‘offline’. Afterwards, only the material used in the cut is processed in full ‘online quality’ for the final output.

This path has several advantages. Editing requires significantly less storage space and network infrastructure, and less powerful workstations. At the same time, maximum image quality can be achieved in the final product. More complex drama productions usually utilise this workflow and this is not expected to change in the foreseeable future.

It is crucial in this workflow that the metadata of the offline clips matches the camera originals or transcoded online clips. The creation of the dailies must be in sync with post-production. SWR therefore decided to use Daylight, FilmLight’s dailies software. Daylight reads the metadata of the camera originals in exactly the same way as Baselight (the colour grading system at SWR). If the offline rushes are created with Daylight, you don’t have to worry whether the relink to the online files will work afterwards. In addition, Daylight and Baselight share the same toolset and colour management, so the look development can be carried over one to one. In this way, a production can create good-looking dailies for editorial. If rushes are colour corrected, this correction can automatically be transferred to the final timeline as a starting point. In contrast to lookup tables, the grade remains editable at all times. If one decides to work with dailies in standard dynamic range (SDR), these can be encoded with established lightweight 8-bit codecs. This workflow still allows a high dynamic range (HDR) version with the same look to be created later on.
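When dailies and online media carry identical reel names and source timecodes, which is what reading the camera metadata the same way ensures, relinking comes down to a lookup. The sketch below assumes an integer frame rate, non-drop-frame timecode and hypothetical field names (`reel`, `start_tc`, `frames`, `path`); real conform tools use far richer metadata.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


def tc_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


@dataclass
class Clip:
    reel: str        # hypothetical: reel / camera clip name from metadata
    start_tc: str    # source start timecode
    frames: int      # duration in frames
    path: str = ""   # location of the media file


def relink(offline: Clip, online: Iterable[Clip], fps: int) -> Optional[Clip]:
    """Return the online clip whose reel and timecode range cover the offline clip."""
    start = tc_to_frames(offline.start_tc, fps)
    end = start + offline.frames
    for candidate in online:
        if candidate.reel != offline.reel:
            continue
        c_start = tc_to_frames(candidate.start_tc, fps)
        if c_start <= start and end <= c_start + candidate.frames:
            return candidate
    return None  # metadata mismatch: this is where relinks fail
```

If the offline files had been transcoded by a tool that rewrote reel names or timecodes, `relink` would return `None`, which is exactly the failure a shared metadata reader avoids.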

However, the offline-online route is not ideal for many time-sensitive productions in the broadcast environment, as it is more complex and slows the workflow down. Online workflows are often better suited in these cases, especially for news and other live productions. But UHD and HDR do place significantly higher demands on the codecs and infrastructure.

In online workflows in the broadcast environment, it is common practice to transcode all camera data into a uniform format, usually chosen to suit the editing system in use. Not every editing system can decode all codecs with the same real-time performance, but real-time playback is essential in editing. If you want to preserve the full dynamic range of the camera material and implement a scene-referred colour workflow, you also face the challenge of colour space conversions. Online footage intended for scene-referred workflows and HDR output should be stored with at least 10 bits, ideally 12 bits, of precision.
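A rough calculation shows why the bit depth matters. Assuming an idealised, purely logarithmic curve that spreads about 14 stops of camera dynamic range evenly across the full code range (real log curves are not purely logarithmic and often use a legal range, so actual figures are lower), the number of code values per stop is:

```python
def codes_per_stop(bit_depth: int, stops: float) -> float:
    """Code values per stop for an idealised pure-log curve spanning `stops`
    stops over the full code range (an illustrative assumption)."""
    return (2 ** bit_depth) / stops


for bits in (8, 10, 12):
    print(bits, round(codes_per_stop(bits, 14), 1))
# 8 18.3
# 10 73.1
# 12 292.6
```

Fewer than 20 code values per stop at 8 bits leaves little headroom for the large gain changes of a grade or an HDR rendering before banding appears, which is why 8-bit codecs remain acceptable for SDR dailies but not for scene-referred online material.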

When considering colour space conversions, there are two solutions. You can either commit to always transcoding into the native log-encoded colour space of each camera manufacturer, or convert the colours into one uniform ‘house standard’.

If you choose the native colour space of the camera, different colour space conversions must take place in the edit for different camera types, and this information must also be passed to colour grading. Thus, this option can be rather complex.

If you cannot ensure a shot-based metadata pipeline, it makes sense to transcode the source material from the cameras into a house standard: one common scene-referred colour space. This also simplifies colour correction of long flattened sequences exported from editorial. In the edit, users need to apply only one consistent colour space conversion for previewing. For this option, you must also provide a solution to robustly convert non-scene-referred material, such as archive clips and graphics, into the scene-referred house standard.

These conversions can be performed in the editing software via LUTs, but creating these LUTs is non-trivial. There is also the question of which colour space is best suited as a scene-referred house standard. Unfortunately, standardisation committees have so far paid little attention to this crucial point. As of 2023, there is no ITU-defined scene-referred encoding colour space suitable for practical use. SMPTE FS-Log / FS-Gamut is a first approach in this direction, but as it does not address the underlying goal of reducing complexity, it has not yet found practical application.
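To see where the difficulty lies, here is a deliberately naive sketch that samples a display-referred to scene-referred conversion into a 1D `.cube` LUT. ACEScct is used as the house standard purely for illustration; the article does not say which colour space SWR chose. The sketch assumes a pure gamma 2.4 display decode (a simplified BT.1886 with zero black level), maps display white straight to scene-linear 1.0 without any inverse tone mapping, and ignores the gamut conversion entirely. Each of those shortcuts is a reason real conversion LUTs take more care.

```python
import math


def bt1886_decode(v: float, gamma: float = 2.4) -> float:
    """Simplified BT.1886 decode (zero black level): code value -> relative linear."""
    return max(v, 0.0) ** gamma


def acescct_encode(lin: float) -> float:
    """ACEScct encoding of a scene-linear value (piecewise curve from the ACEScct spec)."""
    if lin <= 0.0078125:
        return 10.5402377416545 * lin + 0.0729055341958355
    return (math.log2(lin) + 9.72) / 17.52


def make_cube_1d(size: int = 1024, title: str = "rec709_to_acescct_naive") -> str:
    """Sample the naive transfer-only conversion into a 1D .cube LUT (domain 0..1)."""
    lines = [f'TITLE "{title}"', f"LUT_1D_SIZE {size}"]
    for i in range(size):
        v = i / (size - 1)
        out = acescct_encode(bt1886_decode(v))
        lines.append(f"{out:.6f} {out:.6f} {out:.6f}")
    return "\n".join(lines) + "\n"
```

Note how display white lands at an ACEScct value of only about 0.55: whether graphics white should sit there, brighter, or darker in the house standard is a creative and technical decision that no LUT generator can make on its own.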

When handing over an online project from editing back to colour grading, there are three options. You can export a cut list to conform to the online footage. However, this comes with the disadvantages of the offline workflow. The conform can be faulty and any complex effects that the grading software cannot translate automatically have to be recreated manually.

The second option is to export a long continuous sequence from the editing system. All effects are then baked in, and sync is guaranteed. For colour correction, the online sequence must be split into shots using scene detection or, better still, an EDL. The EDL brings the added advantage that shots from the same camera card or the same camera clip can be grouped automatically using its metadata. The software can also automatically assign input colour spaces to different cameras with the help of Media Import Rules.
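The EDL supplies both the cut points (record timecodes) and the source identity (reel or clip name) of every shot in the flattened sequence. A minimal sketch, assuming a cut-only CMX3600-style EDL, parses the events, groups them by reel and assigns an input colour space from a rule table. The rule table is a hypothetical stand-in for the idea of import rules, not Baselight’s Media Import Rules syntax, and the reel-to-camera convention is invented for the example.

```python
import re
from collections import defaultdict
from fnmatch import fnmatchcase
from typing import Dict, List, NamedTuple

TC = r"(\d\d:\d\d:\d\d:\d\d)"
# Cut-only event: number, reel, track, 'C', src in, src out, rec in, rec out
EVENT_RE = re.compile(rf"^(\d{{3,}})\s+(\S+)\s+(\S+)\s+C\s+{TC}\s+{TC}\s+{TC}\s+{TC}\s*$")

# Hypothetical rules: reel-name pattern -> input colour space (first match wins)
INPUT_RULES = [("A*", "ARRI LogC3 / AWG3"),
               ("B*", "Sony S-Log3 / S-Gamut3.Cine"),
               ("*", "Rec.709")]


class Event(NamedTuple):
    number: int
    reel: str
    rec_in: str    # cut point in the flattened sequence
    rec_out: str


def parse_edl(text: str) -> List[Event]:
    events = []
    for line in text.splitlines():
        m = EVENT_RE.match(line.strip())
        if m:  # header and comment lines simply do not match
            events.append(Event(int(m.group(1)), m.group(2), m.group(6), m.group(7)))
    return events


def group_by_reel(events: List[Event]) -> Dict[str, List[int]]:
    groups: Dict[str, List[int]] = defaultdict(list)
    for ev in events:
        groups[ev.reel].append(ev.number)
    return dict(groups)


def input_colour_space(reel: str) -> str:
    for pattern, space in INPUT_RULES:
        if fnmatchcase(reel, pattern):
            return space
    return "unknown"


SAMPLE_EDL = """TITLE: FLAT_SEQUENCE
FCM: NON-DROP FRAME

001  A001C003 V     C        14:02:10:00 14:02:14:00 01:00:00:00 01:00:04:00
002  B002C011 V     C        09:31:00:12 09:31:03:00 01:00:04:00 01:00:06:13
003  A001C003 V     C        14:02:20:00 14:02:22:00 01:00:06:13 01:00:08:13
"""

for reel, numbers in group_by_reel(parse_edl(SAMPLE_EDL)).items():
    print(reel, numbers, input_colour_space(reel))
# A001C003 [1, 3] ARRI LogC3 / AWG3
# B002C011 [2] Sony S-Log3 / S-Gamut3.Cine
```

Scene detection alone would only find the cut points; the reel names are what make shot grouping and per-camera input transforms possible.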

The third option is a metadata-based transfer of colour correction to the editing system. This requires the colour correction system to be available as a plugin in the editing system. No new files need to be rendered, which makes this workflow very fast, flexible and storage-efficient. The colour grading travels back to the editing system as metadata, for example embedded in an AAF file or via a network connection. There, the plugin applies the appropriate grade frame-accurately to the uncorrected material. The project thus remains open to editorial changes, and even to colour adjustments, until the last minute. Clips can be extended, even those with temporal effects applied, and the grade can still be adjusted in the editing system at short notice. Effects from the editing timeline do not have to be recreated manually in colour grading either, as the final rendering takes place in the editing system. This workflow is particularly valuable for productions that require this flexibility or rely heavily on effects plugins and split screens, which would otherwise create a lot of additional work in the colour department.
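The key idea behind this option is that a grade is tied to the source media rather than to a position on the timeline. The hypothetical sketch below stores grades per source clip and per source frame; it does not describe how the actual plugin or its AAF metadata are structured. When an editor extends a clip, frames outside the originally graded range fall back to the nearest grade at the head or tail.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class GradeSpan:
    first_frame: int  # first source frame this grade applies to
    grade_id: str     # hypothetical reference to a grade carried as metadata


@dataclass
class GradeTable:
    """Grades keyed by source clip, then by source frame (illustrative only)."""
    spans: Dict[str, List[GradeSpan]] = field(default_factory=dict)

    def add(self, clip_id: str, first_frame: int, grade_id: str) -> None:
        spans = self.spans.setdefault(clip_id, [])
        spans.append(GradeSpan(first_frame, grade_id))
        spans.sort(key=lambda s: s.first_frame)

    def lookup(self, clip_id: str, source_frame: int) -> Optional[str]:
        """Grade for one source frame; each span runs until the next one starts."""
        spans = self.spans.get(clip_id)
        if not spans:
            return None  # ungraded clip: show it uncorrected
        starts = [s.first_frame for s in spans]
        i = bisect_right(starts, source_frame) - 1
        # Frames before the first span (a head extension) reuse the first grade;
        # the last span is open-ended, so tail extensions reuse the last grade.
        return spans[max(i, 0)].grade_id
```

Because the lookup key is the source frame, retiming or trimming in the edit changes which frames are requested, not which grade belongs to each frame.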

VFX and post-production

The proportion of shots enhanced by VFX is steadily increasing, not least because VFX can heighten the visual experience and make stories more believable. VFX work often aims for a physically plausible appearance to make effects as realistic as possible. Scene-referred media assets are particularly suitable for this purpose because they represent the light captured on set without a display rendering baked in.

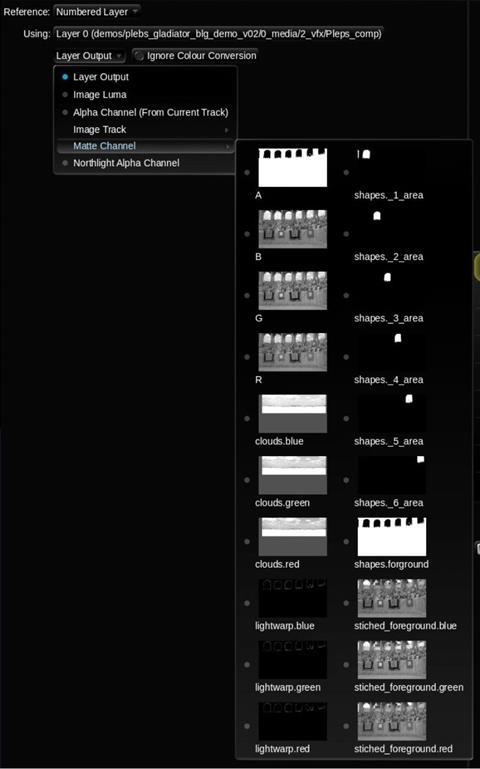

In addition to the scene-referred source files, a pre-visualisation of the final look is essential for VFX work. Only in this way can the VFX team ensure that the effects still look plausible after they have been processed through colour grading. Again, there are different methods to transfer colour management and grading information from the colour department to the VFX system.

The de facto standard for describing the colour pipeline is OpenColorIO (OCIO). Via OCIO, entire colour pipelines, including CDL and CLF (Academy Common LUT Format), can be communicated in a standardised way. The synchronisation between VFX and post-production is then simplified.
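In OCIO pipelines, CDL values are often exchanged as small ASC CDL XML files (`.cc`, `.ccc` or `.cdl`), which an OCIO `FileTransform` can read. A minimal sketch that writes a single `ColorCorrection` element with Python’s standard library; the namespace URI is the one commonly found in such files, and the id and values in the usage note are made up.

```python
import xml.etree.ElementTree as ET
from typing import Sequence

CDL_NS = "urn:ASC:CDL:v1.01"  # namespace commonly used in .cc/.cdl files


def _triple(values: Sequence[float]) -> str:
    return " ".join(f"{v:.6f}" for v in values)


def color_correction_xml(cc_id: str, slope: Sequence[float], offset: Sequence[float],
                         power: Sequence[float], sat: float) -> str:
    """Serialise one ASC CDL as a ColorCorrection (.cc) XML document."""
    ET.register_namespace("", CDL_NS)  # write the namespace as the default xmlns
    cc = ET.Element(f"{{{CDL_NS}}}ColorCorrection", id=cc_id)
    sop = ET.SubElement(cc, f"{{{CDL_NS}}}SOPNode")
    ET.SubElement(sop, f"{{{CDL_NS}}}Slope").text = _triple(slope)
    ET.SubElement(sop, f"{{{CDL_NS}}}Offset").text = _triple(offset)
    ET.SubElement(sop, f"{{{CDL_NS}}}Power").text = _triple(power)
    sat_node = ET.SubElement(cc, f"{{{CDL_NS}}}SatNode")
    ET.SubElement(sat_node, f"{{{CDL_NS}}}Saturation").text = f"{sat:.6f}"
    return ET.tostring(cc, encoding="unicode")
```

For example, `color_correction_xml("shot_010", (1.1, 1.0, 0.9), (0, 0, 0), (1, 1, 1), 0.95)` produces a file a VFX artist could reference from their OCIO config, so the same numbers drive the look on set, in VFX and in grading.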

For complex corrections involving spatial operators, metadata-based workflows can also be used, provided that a native colour grading plugin is available in VFX.

One should not forget the route back from VFX to colour grading, because assets such as masks and passes are often created during VFX processing and can be of high value for colour correction. OpenEXR has established itself as the de facto standard here. The OpenEXR file format can carry an arbitrary number of masks, channels and metadata from VFX to colour grading. It is well suited to storing linear scene-referred data and offers a range of lossless and lossy compression types.

The more information available in colour grading, the fewer iterations a VFX shot has to run through. The colourist can treat the final result more precisely by using additional channels. Again, a well-established pipeline ultimately increases the efficiency of the entire post-production process.

Remote viewing

External viewers are invited to attend the colour grading session or screening for a good reason; they typically have feedback to give. Therefore, the feedback channel back to colourists is just as important as the live image itself.

However, viewers are not necessarily familiar with complex user interfaces. Therefore, a straightforward but powerful interface is essential.

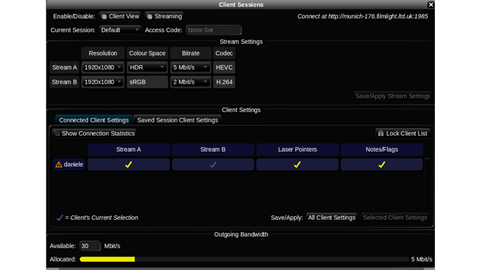

Every grading system at SWR has the ability to stream to external viewers. The viewers do not need any additional software installed because the viewing app runs natively in a web browser.

Faithful colour reproduction in SDR and HDR is guaranteed, with the web browser reading the display’s information and requesting the appropriate stream from Baselight. This also makes it possible to offer different streams to different viewers optimised for their respective display devices. A viewer on a standard computer monitor under Windows receives an sRGB stream. Another viewer on an iPad or Apple MacBook Pro gets an HDR stream. In addition to the pure assessment of the image, the viewer can also enter metadata in the form of flags and comments directly into the grading system. This metadata appears instantly in the grading system, and the colourist can access, comment and process it immediately. This eliminates the need to maintain or synchronise notes in another system.

The Client View web app allows the viewer to navigate freely in the grading timeline, detached from the colourist. This allows a VFX supervisor to check the status of the VFX versions, for example, or cinematographers to review other scenes while the colourist is working on a complicated shot. Different UI views are available to the remote viewers.

In addition, the viewer’s mouse cursor position in the live image is optionally sent back to the grading system as a virtual ‘laser pointer’, which simplifies communication between remote viewers and colourists.

It is valuable for colourists to be able to configure the settings for each session participant in detail. Besides technical parameters such as resolution, colour space and bit rate, the colourist can set privileges such as the laser pointer for each viewer individually.
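The stream a viewer receives is effectively a negotiation between what their browser reports about the display and the limits the colourist sets for that viewer. The sketch below is hypothetical: the field names, default values and encoding labels are assumptions, not Baselight’s settings. In a browser, the HDR capability could come from the CSS media query `(dynamic-range: high)`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewerSettings:
    """Per-viewer options a colourist might set (hypothetical fields)."""
    max_height: int = 2160       # e.g. 1080 to cap resolution
    bitrate_kbps: int = 20000
    allow_hdr: bool = True
    laser_pointer: bool = False  # privilege: may this viewer point at the image?


@dataclass(frozen=True)
class StreamConfig:
    height: int
    bitrate_kbps: int
    encoding: str                # assumed labels, not actual stream names


def negotiate(display_reports_hdr: bool, display_height: int,
              settings: ViewerSettings) -> StreamConfig:
    """Combine what the browser reports with the colourist's limits for this viewer."""
    hdr = display_reports_hdr and settings.allow_hdr
    return StreamConfig(
        height=min(display_height, settings.max_height),
        bitrate_kbps=settings.bitrate_kbps,
        encoding="HDR (PQ)" if hdr else "SDR (sRGB)",
    )
```

A Windows desktop monitor reporting no HDR support therefore gets the SDR stream, while an HDR-capable laptop gets the HDR stream only if the colourist has not disabled it for that viewer.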

Colour grading is a creative process that can easily be disrupted by technical distractions. A poor internet connection or a weak device at the viewer’s end will undoubtedly affect the process. The colourists, therefore, are provided with a detailed view of each connected user’s technical experience. The software logs dropouts, reconnects, latencies and playback problems and warns the colourist about suboptimal conditions for each viewer. The colourist can react and assign a lower resolution or reduce the bit rate to that viewer, for example, to ensure the creative process is not disturbed.
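The monitoring described above can be pictured as per-viewer statistics checked against thresholds, with a suggested remedy. This is a hypothetical sketch: the field names, the 250 ms latency limit, the 2,000 kbit/s floor and the halve-the-bitrate rule are all illustrative assumptions, and the final decision stays with the colourist.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ViewerStats:
    """Measurements for one viewer over a recent time window (hypothetical fields)."""
    name: str
    dropouts: int
    reconnects: int
    latency_ms: float
    stalled_s: float       # seconds of stalled playback
    bitrate_kbps: int


def warnings_for(v: ViewerStats, max_latency_ms: float = 250.0) -> List[str]:
    """List human-readable problems; thresholds are illustrative assumptions."""
    issues = []
    if v.dropouts or v.reconnects:
        issues.append(f"{v.dropouts} dropouts, {v.reconnects} reconnects")
    if v.latency_ms > max_latency_ms:
        issues.append(f"latency {v.latency_ms:.0f} ms")
    if v.stalled_s > 0:
        issues.append(f"playback stalled for {v.stalled_s:.1f} s")
    return issues


def suggested_bitrate(v: ViewerStats, floor_kbps: int = 2000) -> int:
    """Halve a struggling viewer's bitrate, never below a floor or above the current rate."""
    if not warnings_for(v):
        return v.bitrate_kbps
    return min(v.bitrate_kbps, max(floor_kbps, v.bitrate_kbps // 2))
```

Keeping the check per viewer means one participant on a poor connection can be throttled without degrading the stream for everyone else in the session.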

We can observe the principle of emergence here again. The external observer becomes part of the overall system. This creates functionality that did not exist before.

The connection between Baselight and each web browser is realised via reflectors. The FilmLight Cloud thus only communicates with one trusted and constant counterpart on the internet, and the content is transmitted to all viewers via the reflector. TLS encryption secures the connection between the browser and the reflector, and the image content is additionally transmitted with SRT encryption. As a result, the technical hurdles for in-house IT are manageable.
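The internals of FilmLight’s reflectors are not described here, but the benefit of the topology can be illustrated with a toy relay: the grading side sends each packet once to a single endpoint, and the relay copies it to every viewer. The class below is hypothetical; transport, TLS and SRT encryption are deliberately omitted.

```python
from collections import deque
from typing import Deque, Dict


class Reflector:
    """Toy fan-out relay: one publisher (the grading system), many viewers."""

    def __init__(self, max_backlog: int = 8) -> None:
        self._viewers: Dict[str, Deque[bytes]] = {}
        self._max_backlog = max_backlog

    def subscribe(self, viewer_id: str) -> Deque[bytes]:
        # deque(maxlen=...) silently drops the oldest packet when full,
        # so one slow viewer cannot hold up the others.
        backlog: Deque[bytes] = deque(maxlen=self._max_backlog)
        self._viewers[viewer_id] = backlog
        return backlog

    def unsubscribe(self, viewer_id: str) -> None:
        self._viewers.pop(viewer_id, None)

    def publish(self, packet: bytes) -> None:
        # The grading system sends each packet once; the relay copies it
        # to every connected viewer.
        for backlog in self._viewers.values():
            backlog.append(packet)
```

Because viewers only ever connect to the relay, the facility’s firewall needs to allow a single outbound connection rather than one inbound connection per external participant.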

Conclusion

The complete digitalisation of all production steps allows the different departments in a production to dovetail more closely and effectively. With well-configured, deep integrations between departments through open and proprietary interfaces, more time can be spent on the creative process. Creative decisions also become more meaningful because everyone works from the same basic assumptions. Through these collaborative approaches, creative decisions are preserved and do not have to be laboriously repeated. Efficiency, creativity and image quality all increase.

Daniele Siragusano is a workflow specialist and image engineer at FilmLight. Siragusano is instrumental in developing HDR grading and colour management tools within Baselight.

Andy Minuth is a colour workflow specialist at FilmLight, responsible for training, consulting and customer support. Minuth is also involved in the development of new features in Baselight.
